Editor’s note: This article was published under our former name, Open Philanthropy.

Advances in frontier science and technology have historically been the key drivers of massive improvements in human well-being. Safety is sometimes portrayed as just an impediment to that progress, but we believe safety is both itself a key kind of progress and a precondition for ongoing innovation, especially when it comes to unprecedented breakthroughs like artificial general intelligence (AGI). While there are tensions to navigate, safety and progress at their best are mutually reinforcing, and both are essential to realizing the profound potential benefits of transformative new technologies.

Introduction

Open Philanthropy’s mission is to help others as much as we can with the resources available to us. We do that by working across several worldviews, each of which has an associated structural thesis for why it might represent an outstanding philanthropic opportunity. For instance:

- Accelerating technological and economic growth at the global frontier. Over the last few hundred years, scientific and technological progress has been the root cause of a profound and interrelated set of improvements, such as much higher standards of living, extended lifespans, and reductions in child mortality. But because these new ideas are prototypical global public goods that no particular actor or even country can fully capture, markets and governments systematically undersupply them, and there’s space for outstanding philanthropy.

- Preventing global catastrophic risks, especially from advanced AI and biotechnology. We have strong evidence from the history of humans and other mammals that natural risks to our survival as a species are relatively small. But a narrow slice of new technologies could present unprecedented catastrophic risks. In part because these risks are global and unprecedented, they don’t have a large natural market or democratic constituency. That neglect by other actors, in addition to the potentially enormous stakes, makes addressing these risks a good fit for philanthropy.

- Improving health and incomes for the poorest people in the world. Because these people don’t have much money themselves or the ability to vote in wealthy countries, their interests are neglected. As a result, direct services like distributing bednets to prevent malaria and providing vitamin A supplements, as well as leveraged research and policy advocacy, can be very cost-effective for philanthropy (e.g. a few thousand dollars to save a life). Historically, this has been our biggest focus.

This post focuses on how we think about the relationship between the first two of these worldviews (accelerating growth and preventing global catastrophic risks), especially when it comes to AI, which looks like both an enormously promising new technology for unlocking economic growth and a source of potentially unprecedented risks to humanity.

In short, we think that advancing science and technology is among the best ways to improve human well-being. While there are tensions to navigate between safety and progress, we believe that they can be mutually reinforcing and that both are essential to realizing the profound benefits of new technology.

The rest of this post covers:

- Why and how we invest in accelerating global technological and economic growth.

- How safety itself can represent a form of technological progress.

- How improvements in safety can accelerate progress (through preserving social license to operate and directly making new technologies more useful).

- How we handle remaining tensions and uncertainties.

- How all of this applies to our approach to AI, which we think holds the prospect of both unprecedented benefits and unprecedented risks.

Why and how we invest in accelerating scientific and technological progress and economic growth more broadly

Advancements in science and technology have been essential drivers of improved human well-being over the last few centuries. Medical technologies, like vaccines and antibiotics, have saved hundreds of millions of lives. The Green Revolution, driven by innovations in plant breeding, fertilizer, and irrigation, may have saved over a billion people from starvation. Electricity and clean water infrastructure, much of it enabled by innovations in engineering, have dramatically reduced child mortality.

Scientific and technological progress is also the primary engine of long-run economic growth, which has lifted billions out of poverty and continues to raise living standards worldwide. As we’ve discussed elsewhere, economic growth is driven in large part by new ideas, like treating infections with penicillin or developing nitrogen fertilizers to increase crop yields, that can be shared and applied by many people at once, making them canonical global public goods. Ben Jones and Larry Summers calculated that each $1 invested in R&D yields a social return of ~$14, though such estimates of course come with enormous uncertainty. Work by our colleagues Tom Davidson and Matt Clancy found even larger returns when accounting for the global spillover of ideas from one country to another: large enough that the best individual projects and leveraged efforts (e.g. advocacy to improve scientific funding practices overall, or for more public R&D dollars) are above our cost-effectiveness bar.

When there is a well-functioning market, companies can and will invest with the hope of capturing profits. A salient example over the last few years is the enormous progress in weight loss drugs, driven by significant investments from large pharmaceutical companies.

But market or policy failures often prevent profit incentives from working:

- Early-stage R&D, like solar in the early 2000s or advanced geothermal now, often has high social returns but low private returns. Accordingly, almost no one has strong profit incentives to do it, or to even advocate for public support for it.

- Many people would like to move to more productive regions where they’d make more money and innovate faster (both globally and in the U.S.), but restrictions on housing construction make it too expensive. Existing residents vote to limit new housing, but the people who need that housing can’t vote because they don’t live there yet.

- Some technologies are underfinanced because their benefits spill over across borders to populations that lack market power. For instance, mRNA vaccine development was underfunded relative to its potential to reduce disease burden in rich countries, but even more so when accounting for its potential in poor countries.

The history of philanthropy shows that there are often outsized opportunities for philanthropists to respond to these failures. This is consistent with our experience over the last decade:

- Our Innovation Policy portfolio supports think tanks, researchers, and advocates to accelerate growth and improve the scientific ecosystem. We’ve supported progress-oriented think tanks like the Institute for Progress and Foundation for American Innovation, as well as “metascience” organizations like the Institute for Replication. We also support the Progress Studies movement to help build an ecosystem of people that care about these issues.

- Our Housing Policy Reform work, which we’ve funded since 2015, seeks to unlock economic growth by reducing local barriers to housing construction. For example, our early support for grassroots organizing and coalition-building groups like Open New York contributed to major policy wins like the “City of Yes” initiative, New York’s largest zoning overhaul in 60 years. In addition to lowering rents, reducing homelessness, and raising incomes directly, making it easier to build in cities can also accelerate innovation.

- Our Science and Global Health R&D programs accelerate medical breakthroughs, primarily but not exclusively for diseases that mostly affect the global poor, for which market incentives are comparatively weak. We’ve supported the ongoing Phase 3 trial for the MTBVAC tuberculosis vaccine, and the Phase 3 trials for the R21 malaria vaccine that our program officer Katharine Collins co-invented as a grad student. We’ve funded multiple different strategies to research novel gene drives for malaria control.

A common thread running through this work is captured well by Caleb Watney of the Institute for Progress: “The U.S. is the R&D lab for the world and we should act like it.”

How safety itself represents a form of technological progress

Because some innovations are “dual-use” (capable of serving both beneficial and destructive purposes), improvements at the frontier of science and technology require concomitant advancements in safety to actually translate into real-world benefits. Jason Crawford, one of the central advocates for the field of Progress Studies, articulated this idea as safety itself being a form of progress:

All else being equal, safer lives are better lives, a safer technology is a better technology, and a safer world is a better world. Improvements in safety, then, constitute progress.

Sometimes safety is seen as something outside of progress or opposed to it. This seems to come from an overly-narrow conception of progress as comprising only the dimensions of speed, cost, power, efficiency, etc. But safety is one of those dimensions.

Of course, it is intrinsically valuable to prevent large-scale global catastrophes, and that is the central reason we invest in AI safety. But safety is also a crucial bridge between technological advancement and human well-being. Even if someone is only concerned with advancing technological capabilities as much as possible, they should want a nontrivial portion of society’s resources devoted to guarding against extreme risks.

How improvements in safety can advance progress

Better safety mechanisms can advance science and technology in several ways.

First, there needs to be a baseline level of safety to avoid truly unprecedented risks that jeopardize progress altogether. We will not unlock the full promise of frontier technologies like biotechnology and AI if we face a sufficiently damaging global catastrophe caused by engineered pandemics or AI loss-of-control.

Second, effective safety and security measures for new technologies are often critical to accelerating technology adoption, so neglecting safety can backfire and stunt progress. The 1979 partial nuclear meltdown at Three Mile Island in Pennsylvania was caused in part by a lack of operational safety measures. It triggered a massive public backlash, and no new U.S. nuclear plant was approved for construction for more than 30 years, even though nuclear power is one of the safest forms of energy. Accidents at Chernobyl and Fukushima prompted similar reactions worldwide.

Third, there are often positive spillovers between advancing technological safety and accelerating the underlying technology, frequently because safety work makes the technology more useful and functional. Self-driving cars are a central example: enormous strides in safety have been a key part of their progress in recent years. This feedback loop sometimes works the other way as well: better technological capabilities can unlock new safety techniques. For instance, metagenomic sequencing, which can be used to detect a wide range of novel pathogens in wastewater, became feasible only with the development of high-throughput sequencing machines that can read billions of genetic fragments at relatively low cost. Advancements in AI capabilities have also made it possible to investigate safety questions empirically. For instance, Theorem, a new Y Combinator-backed start-up, is using AI to scale formal verification methods that could produce trustworthy-by-default code that is less vulnerable to attack.

Finally, in their most sophisticated forms, safety systems are genuine technological marvels. For instance, it was a feat of progress not just that we were able to land astronauts on the moon, but that we developed the engineering and logistical systems that allowed them to return to Earth. Similarly, we think that addressing the most urgent safety imperatives today, like ensuring that smarter-than-human AI systems don’t act deceptively and can’t be easily misused by bad actors or authoritarian regimes, would be a remarkable technological accomplishment — on par with moonshots like nuclear fusion, quantum computing, and going to Mars.

Remaining tensions and how we handle them pragmatically

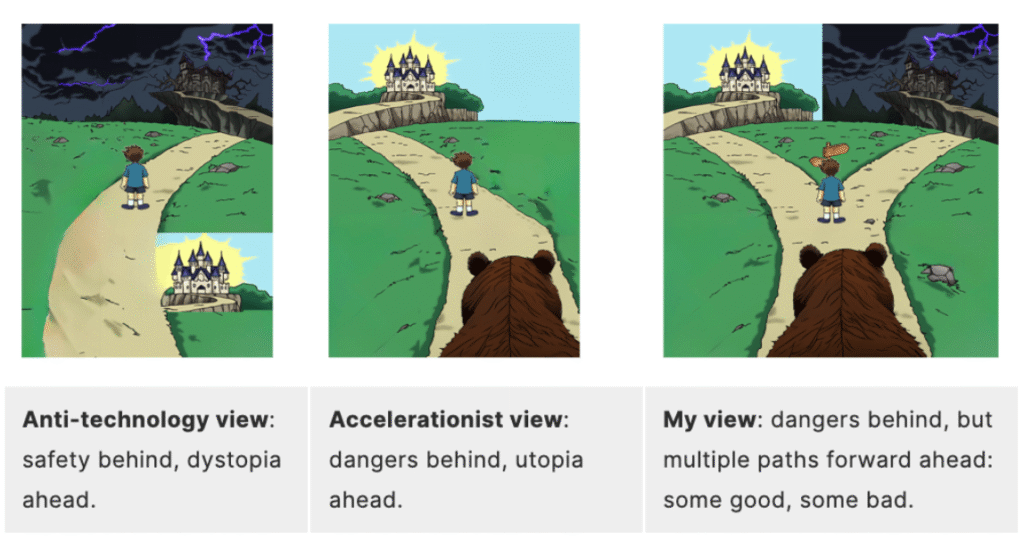

Even though we think safety is part of progress and can be mutually reinforcing with advances in capabilities, there can be genuine tensions between the two.

First, concerns about safety can plainly go too far, leading to undue restrictions on science and technology. This is the case today with nuclear energy, though recent years have seen increasing momentum toward using more of it. At the extreme, “safety” concerns that have little basis can generate resistance to hugely beneficial and extremely well-understood technologies like vaccines or genetically modified crops. Of course, it’s also possible to fail in the other direction and neglect safety too much at the outset. This was plausibly the case when the U.S. lifted its funding ban on biological gain-of-function research in 2017, despite the considerable risks of enabling experiments that could create pandemic-level pathogens. (The funding ban was reinstated in May 2025.)

Second, even initially well-motivated safety efforts can fail because they don’t evolve with technological change, get misapplied in practice, or get the details wrong in the first place. One example of this is the system of Institutional Review Boards (IRBs), which were established to oversee human subjects research in the wake of serious ethical violations throughout the 20th century. While IRBs have played an important role in preventing abuses, ethics preapproval requirements today often create months-long delays for high-impact, low-risk research, including in the social sciences. Another example is the National Environmental Policy Act (NEPA), which was enacted in 1970 to counter high rates of air and water pollution but now often delays or blocks even clean energy projects with lengthy and litigation-prone environmental reviews, clearly undermining its original intent.

Third, market pressures can push companies to create risky technologies without internalizing the societal costs. A classic example is chlorofluorocarbons (CFCs), which depleted the ozone layer and threatened to cause dramatic increases in skin cancer and cataracts. No single company or country had an incentive to unilaterally stop producing CFCs, so government action was required to bring the risks under control. In other cases, companies could improve safety and security at the margin, but doing so would consume scarce resources and be burdensome to maintain, so they neglect safety at great societal cost. Information security at AI labs, which requires time and effort from researchers that could otherwise be spent on advancing capabilities, is one example: as Miles Brundage recently wrote in a piece on the risks of unbridled AI competition, “frontier companies are years away from reliably defending themselves effectively against sophisticated state attackers, a timeline that compares unfavorably with these companies’ stated expectations of building extremely capable systems in a matter of months to years.”

Fourth, and perhaps most fundamentally, future discoveries are a black box; we don’t and can’t know the full risks that future technologies may pose. Michael Nielsen has written compellingly about this central tension:

The underlying problem is that it is intrinsically desirable to build powerful truthseeking [artificial superintelligence], because of the immense benefits helpful truths bring to humanity. The price is that such systems will inevitably uncover closely-adjacent dangerous truths. Deep understanding of reality is intrinsically dual use. We’ve seen this repeatedly through history – a striking case was the development of quantum mechanics in the early twentieth century, which helped lead to many wonderful things (including much of modern molecular biology, materials science, biomedicine, and semiconductors), but also helped lead to nuclear weapons. Indeed, you couldn’t get those benefits without the negative consequences. Should we have avoided quantum mechanics – and much of modern medicine and materials and computation – in order to avoid nuclear weapons? Some may argue the answer is “yes”, but it’s a far from universal position. And the key point is: sufficiently powerful truths grant tremendous power for both good and ill.

John von Neumann, who worked on the Manhattan Project, made a similar point in his 1955 essay “Can We Survive Technology?”

The very techniques that create the dangers and the instabilities are in themselves useful, or closely related to the useful … Technological power, technological efficiency as such, is an ambivalent achievement. Its danger is intrinsic.

The dual-use nature of fundamental scientific progress rules out any simplistic, tribal response. It calls for investments in the right kinds of institutions that can deliver the safety and security needed to keep pace with our rapidly expanding knowledge without unduly hampering progress.

We don’t have uniform or simple answers to these tensions, and we spend significant time and effort thinking about and debating them. For instance, our colleague Matt Clancy wrote a report entitled “The Returns to Science in the Presence of Technological Risks”, which compared the traditional health and income benefits of science to its potential costs, using the results of a recent forecasting tournament about new dangers associated with technological progress. That report’s conclusions were nuanced: setting aside tail risks (which are hard to forecast but have very large impacts on society), it found that the benefits of science far outweighed the forecasted costs. But there is no principled reason to set aside tail risks, even if they are hard to forecast. Moreover, our Biosecurity and Pandemic Preparedness team thinks that the forecasts Matt used to estimate future biological risks badly underestimate the risks in coming decades. (We think that view is unusually plausible, given the team’s expertise and evidence that has become public since the original forecasts were made.)

In practice, when these tensions come up in our grantmaking we do our best to find a pragmatic balance. For instance, our Abundance and Growth Fund, which Matt leads, includes work on “metascience,” or the study of how to improve the way science is funded and conducted, including for biology. While we believe this work is a leveraged way to eventually increase beneficial outcomes from biotechnology, it could also plausibly increase the likelihood of new biological risks from engineered pandemics. To address this tension, we decided to devote ~20% of our metascience portfolio to work that is directionally positive (though not optimized) from a biosecurity perspective, which we believe is enough to make the whole portfolio net-positive on biosecurity grounds.

How all of this applies to our work on AI specifically, which we think could bring both unprecedented benefits and unprecedented risks

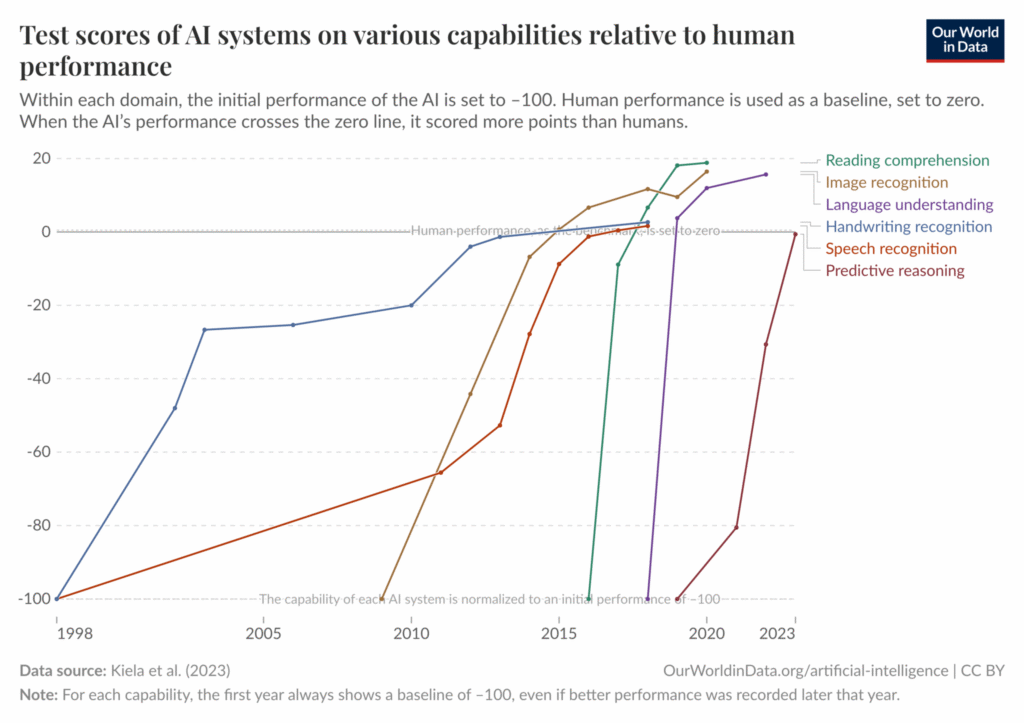

We think a lot about the relationship between safety and progress for AI, where capabilities have accelerated so quickly over the last decade that scientists struggle to create capability benchmarks that aren’t quickly made obsolete.

As philanthropists, we look for market failures around accelerating the most socially promising areas in science and technology. There are very strong commercial incentives to accelerate the underlying AI capabilities of foundation models, but comparatively weak incentives to support certain neglected policy areas around AI progress in the U.S. and other democracies, as well as AI applications for beneficiaries who lack market power. To capture this upside, we have made grants like:

- Funding Nobel Prize winner David Baker’s work on using AI to create vaccines against diseases like syphilis, as well as countermeasures to other threats. Baker’s lab uses AI tools to build protein structures that meet particular specifications, like binding to specific venom toxins to neutralize snakebites.

- Supporting research and advocacy on high-skill immigration through our Abundance and Growth Fund, to make sure that the U.S. and other highly productive democracies have access to the best AI talent in the world.

We think the most serious market failures, however, relate to the market overindexing on accelerating AI capabilities and underindexing on safety and security. Vitalik Buterin describes the broad dynamic well:

The world over-indexes on some directions of tech development, and under-indexes on others. We need active human intention to choose the directions that we want, as the formula of “maximize profit” will not arrive at them automatically.

For AI, we think companies and governments systematically underinvest in defensive advances in safety and security for several reasons:

- Catastrophic AI risk mitigation is a public good. Because the benefits of reducing risks from AI are widely shared, companies don’t have strong enough commercial incentives to fund risk reduction at socially optimal levels.

- Competitive dynamics can create local incentives to underinvest in AI safety. Leading AI companies are under strong financial pressure to accelerate capabilities and bring products to market, which creates incentives to cut corners on safety. Many current and former employees at leading AI companies (in private and in public) have said that they feel trapped in a “regretful racing” dynamic, where they would prefer to move more cautiously but feel that other companies are speeding ahead anyway.

- Governments and civil society have been slow to respond to rapid AI progress. Institutions haven’t kept pace with the speed of AI development, creating a gap in governance (i.e. the “pacing problem”).

Even though we think the median outcome from AI advancement is likely (very) positive, we focus the bulk of our AI work on guarding against its downside risks. That is because: (a) the worst-case scenarios are so bad; (b) market forces so badly neglect the opportunities to mitigate them; and (c) we are very small relative to the overall space of AI funding, research, and policy.

Next time

This is Part 1 in a three-part series. While this part focused on why, Part 2 explains how we aim to reduce worst-case risks from advanced AI. Part 3 makes the case that now is a uniquely high-impact time for philanthropic funders to support AI safety and security.